OpenAI CEO Sam Altman admits "there's no proven playbook" after former alignment lead suggests the company prioritizes "shiny products" like GPT-4o over safety

What you need to know

OpenAI has lost a handful of its top executives in the past week after shipping its cutting-edge GPT-4o.

Former employees have expressed concerns over OpenAI's big push to achieve the AGI benchmark while placing all safety measures on the back burner.

Reports from the rumor mill indicate OpenAI forces its departing employees to sign NDAs or risk losing their vested equity, preventing them from criticizing the company or revealing its in-house affairs, but CEO Sam Altman has refuted the claims.

OpenAI might be on the brink of perhaps the biggest technological leap with its new flagship GPT-4o model. Who would have thought that we'd get to see an AI chatbot holding a human-like conversation with another AI chatbot? But that doesn't even begin to detail the kind of "magic" OpenAI CEO Sam Altman promises with the new model.

The new flagship model with reasoning capabilities across audio, vision, and text in real-time isn't the only major news stemming from the company. In a surprising turn of events, several high-level OpenAI executives departed from the company shortly after the ChatGPT maker unveiled its GPT-4o model.

On Friday, Jan Leike, OpenAI's head of alignment, superalignment lead, and executive, took to X (formerly Twitter) to announce his departure from the company. He disclosed he joined OpenAI thinking it was the best place in the world to research "how to steer and control AI systems much smarter than us."

Jan disclosed that he'd gotten into disagreements with OpenAI's leadership over its core priorities on the next generations of models, on security, monitoring, preparedness, safety, adversarial robustness, (super)alignment, confidentiality, societal impact, and more. The former OpenAI employee said these aspects are hard to get right, and he didn't feel like OpenAI was on the right trajectory to address some of the issues raised.

"Building smarter-than-human machines is an inherently dangerous endeavor," Jan stated. "OpenAI is shouldering an enormous responsibility on behalf of all of humanity." Strangely enough, Jan states that both safety culture and process have been placed on the back burner in favor of "shiny products."

Finally, Jan indicated that OpenAI must become a safety-first AGI company for humanity to reap benefits from the feat. One AI researcher has already put p(doom), the estimated probability that AI will end humanity, at 99.99%. The researcher further disclosed the only way to avoid inevitable doom is to not build it in the first place.

Is the world ready for AGI without guardrails and regulation?

OpenAI's top executives, Sam Altman and Greg Brockman, acknowledged Jan's remarks, outlining several measures that are already in place to help ensure safety as the company progresses toward the AGI benchmark, including scaling up deep learning, safe deployment of increasingly capable systems, and more.

However, the executives admitted:

"There's no proven playbook for how to navigate the path to AGI. We think that empirical understanding can help inform the way forward. We believe both in delivering on the tremendous upside and working to mitigate the serious risks; we take our role here very seriously and carefully weigh feedback on our actions."

In the past, Sam Altman has admitted that there's no big red button to stop the progression of AI.

Ilya Sutskever and @signüll were among the first OpenAI employees to depart from the company last week. Details leading up to their departure from the hot startup remain slim, with the former indicating that he was leaving the firm to focus on a project that's "personally meaningful." The latter didn't disclose why they were leaving the team either, but gave a little insight into OpenAI's internal operations while referring to Sam Altman as a "genius master-class strategist."

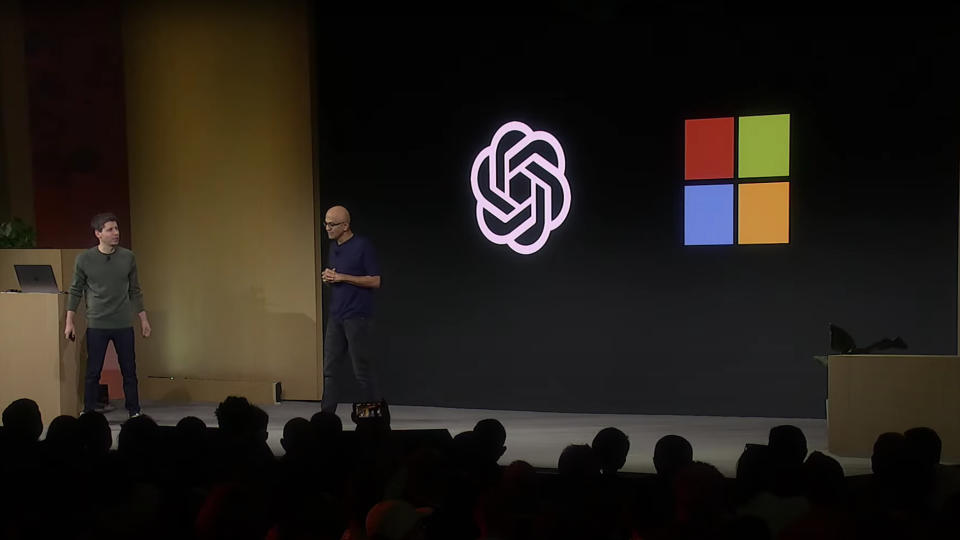

Signüll backed up these claims by citing Altman's strategic deals with Apple and Microsoft, which place OpenAI in a sweet spot with access to infinite computing power, customers, and the possibility of ChatGPT making its way to the greatest piece of technology humanity has ever made, the iPhone. I mean, OpenAI already shipped a dedicated macOS ChatGPT app, snubbing Windows. The company explained that it shipped the app to Mac users because it's prioritizing where its clients are, potentially indicating most ChatGPT users use Apple devices to leverage the service's capabilities.

"OpenAI now sits squarely between two of the largest companies in both consumer & enterprise. He forever cemented OpenAI as the defacto name when anyone in the world thinks of “AI” & turned every single OpenAI weakness into a strength—the only guy that could make Google dance & be put in a massively uncomfortable position. Absolutely incredible execution."

The former OpenAI employee also revealed that Altman had reportedly crafted "a very fun clause" in OpenAI's deal with Microsoft. Signüll further stated that Microsoft would be left with zero IP, despite its 49% stake in the firm's for-profit arm, once OpenAI hits the coveted AGI benchmark.

Once OpenAI hits the coveted AGI status, Microsoft will have zero IP, and despite its 49% stake, it'll have no control. OpenAI and CEO Sam Altman will reportedly take all the credit.

Ex-OpenAI employees forced to remain tight-lipped

There's been a lot of speculation about what is happening behind closed doors at OpenAI that has forced some of its top executives, including the head of alignment and safety, to leave the company abruptly.

While former employees who've departed from the company recently remain quiet about OpenAI's in-house affairs, a report by Vox discloses that OpenAI employees are subjected to nondisclosure and non-disparagement agreements that prevent them from criticizing OpenAI or how it runs its operations, even after leaving the company. Even admitting that they were subjected to the agreements is a violation of the NDA.

Employees who refuse to conform to the NDA's terms run the risk of losing their vested equity at OpenAI. Daniel Kokotajlo, a former OpenAI employee, corroborates this, indicating that he had to let go of his vested equity after leaving the hot startup without signing the NDA. He indicated that he left the company “due to losing confidence that it would behave responsibly around the time of AGI.”

However, OpenAI CEO Sam Altman has addressed the reports that former employees lose their vested equity if they leave the company without signing a non-disparagement agreement.

According to Altman:

"There was a provision about potential equity cancellation in our previous exit docs; although we never clawed anything back, it should never have been something we had in any documents or communication. This is on me and one of the few times I've been genuinely embarrassed running OpenAI; I did not know this was happening and I should have."

Altman further indicated that OpenAI has never held back any employee's vested equity for not signing a separation agreement. He added that there's a team that's working on fixing the standard exit paperwork.