The iPad’s Handwriting Recognition Shows How Apple Does Machine Learning

The more intuitive a task is for humans, generally, the harder it is for artificial intelligence. Think of when Alexa can’t hear your commands, or when your spam filter traps an important email. A computer’s ability to read handwriting, then translate it into letters and numbers it can understand, has been a challenge going back decades. Think of the hit-or-miss capabilities of the Windows Transcriber in the early 2000s, or the PalmPilot in the late ’90s. Handwriting is so nuanced that just analyzing a static letter’s shape doesn’t work.

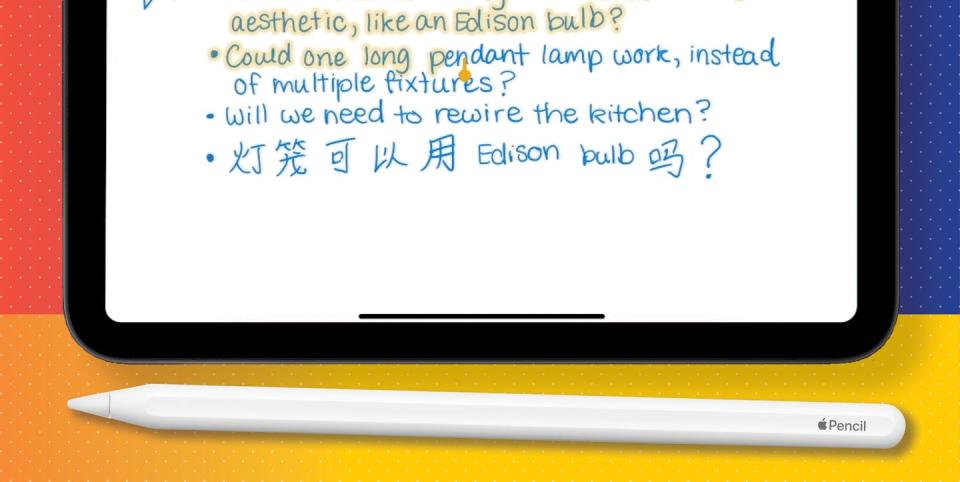

Apple, it seems, found a solution. In the newest update to iPadOS, when you write with the Apple Pencil ($129), the iPad can understand your scrawl and, with Scribble, convert it to typed text. It works like most machine learning—examples inform rules that help predict and interpret a totally new request—but taps into a smarter data set and greater computing power to do what had stumped generations of previous machines. While Alexa and Siri rely on a connection to faraway data centers to handle their processing, the iPad needs to be able to do all that work on the device itself to keep up with handwriting (and drawing—machine learning also helps the Notes app straighten out an imperfect doodle of a polygon, for example). That takes way more effort than you’d think.

“When it comes to understanding [handwriting] strokes, we do data-gathering. We find people all over the world, and have them write things,” says Craig Federighi, senior vice president of software engineering at Apple. “We give them a Pencil, and we have them write fast, we have them write slow, write at a tilt. All of this variation.” That methodology is distinct from the comparatively simple approach of scanning and analyzing existing handwriting. Federighi says that for Apple’s tech, static examples weren’t enough. They needed to see the strokes that formed each letter. “If you understand the strokes and how the strokes went down, that can be used to disambiguate what was being written.”
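To make Federighi's point concrete, here is a minimal Python sketch of why stroke data carries more information than a static image. It is an illustration only, not Apple's method: the `static_shape`, `direction_codes`, and `dynamic_signature` helpers are hypothetical names, and the "static shape" is simplified to the set of points the pen touched.

```python
from typing import FrozenSet, List, Tuple

Point = Tuple[float, float]
Stroke = List[Point]  # points in the order the pen visited them


def static_shape(strokes: List[Stroke]) -> FrozenSet[Point]:
    """What a scan would keep: the inked points, with no ordering (simplified)."""
    return frozenset(p for stroke in strokes for p in stroke)


def direction_codes(stroke: Stroke) -> str:
    """Coarse direction (U/D/L/R) of each pen movement, in drawing order."""
    codes = []
    for (x0, y0), (x1, y1) in zip(stroke, stroke[1:]):
        dx, dy = x1 - x0, y1 - y0
        if abs(dx) >= abs(dy):
            codes.append("R" if dx > 0 else "L")
        else:
            codes.append("D" if dy > 0 else "U")  # screen coordinates: y grows downward
    return "".join(codes)


def dynamic_signature(strokes: List[Stroke]) -> Tuple[str, ...]:
    """One direction string per stroke, preserving stroke order."""
    return tuple(direction_codes(s) for s in strokes)


# The same four corners, drawn two different ways (hypothetical sample data).
one_loop = [[(0, 0), (1, 0), (1, 1), (0, 1), (0, 0)]]        # a single closed stroke
two_hooks = [[(0, 0), (0, 1), (1, 1)], [(0, 0), (1, 0), (1, 1)]]  # two separate strokes

same_static = static_shape(one_loop) == static_shape(two_hooks)
loop_signature = dynamic_signature(one_loop)
hooks_signature = dynamic_signature(two_hooks)
```

In this toy case the two drawings are indistinguishable as static point sets, while their stroke signatures differ, which is the kind of extra signal a recognizer can use to tell similar-looking characters apart.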

That dynamic understanding of how people write means Apple’s software can reliably tell what you’re writing as you’re writing it. Combined with data on a language’s syntax, it also lets the iPad predict what stroke, character, or word you’ll write next. The massive number of statistical calculations needed to do this happens on the iPad itself, rather than at a data center. “It’s gotta be happening in real time, right now, on the device that you’re holding,” Federighi says. “Which means that the computational power of the device has to be such that it can do that level of processing locally.”
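One common way to combine a handwriting recognizer with knowledge of a language is to weigh each candidate character by how likely it is to follow the previous one. The Python sketch below shows that general idea under stated assumptions: the `pick_character` function, the scores, and the tiny prior table are all invented for illustration, and nothing here describes how Apple's software actually works.

```python
import math
from typing import Dict, Tuple


def pick_character(
    shape_scores: Dict[str, float],
    prior: Dict[Tuple[str, str], float],
    previous: str,
) -> str:
    """Pick the candidate with the best combined shape and language score."""

    def combined(candidate: str) -> float:
        # Unseen character pairs get a small floor probability (an assumption).
        p_language = prior.get((previous, candidate), 1e-6)
        return math.log(shape_scores[candidate]) + math.log(p_language)

    return max(shape_scores, key=combined)


# Hypothetical recognizer output: the stroke looks slightly more like "1" than "l".
shape_scores = {"l": 0.45, "1": 0.55}

# Hypothetical language prior: probability of a character given the previous one.
prior = {
    ("c", "l"): 0.30,
    ("c", "1"): 0.01,
    ("2", "1"): 0.20,
    ("2", "l"): 0.01,
}

after_letter = pick_character(shape_scores, prior, previous="c")
after_digit = pick_character(shape_scores, prior, previous="2")
```

With these made-up numbers, the same ambiguous stroke is read as a letter after "c" and as a digit after "2", because the language prior outweighs the small difference in shape scores.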

The use cases for all that processing: You’re handwriting notes on your iPad ($329 and up) with the Pencil during a meeting, and you want to see a map of Zanzibar. You can now swipe to the Maps app and write “Zanzibar” into the search field, rather than pecking at the screen’s keyboard. Or you want to email a few lines of those handwritten notes. You select that section, copy it, then paste it into an email, where the lines show up as if you had typed them. Or you write down a phone number, and you can tap to call it.

If you buy an Apple Pencil, iPadOS 14 gives you an additional method of input, alongside speech and the keyboard, for communicating with the iPad. The use case is narrow, but it’s a digital bridge for handwriting die-hards, and easier than carrying a keyboard with your tablet. It works so well that translating your writing into functional text feels like a natural behavior. This is the kind of novelty Apple introduces often, one that feels so organic you’ll look for excuses to use it.
