IBM Is Ending Facial Recognition to Advance Racial Justice Reform

In a June 8 letter to Congress, IBM CEO Arvind Krishna said the company would halt all facial recognition research, plus the sale of existing related software.

Police departments have access to these tools through firms like Clearview AI, and IBM is questioning whether law enforcement should be using them.

Algorithms used in facial recognition software have a long history of racial bias.

IBM will immediately halt all facial recognition research and stop all sales of its existing related software, according to a letter CEO Arvind Krishna sent to Congress on Monday.

"IBM no longer offers general purpose IBM facial recognition or analysis software," Krishna wrote. "IBM firmly opposes and will not condone uses of any technology, including facial recognition technology offered by other vendors, for mass surveillance, racial profiling, violations of basic human rights and freedoms, or any purpose which is not consistent with our values and Principles of Trust and Transparency. We believe now is the time to begin a national dialogue on whether and how facial recognition technology should be employed by domestic law enforcement agencies."

The move comes during the third week of Black Lives Matter protests in the U.S., sparked by the police killing of George Floyd, a black man living in Minneapolis, on May 25. Authorities monitoring those demonstrations have access to facial recognition tools like the controversial Clearview AI platform, and IBM is grappling with whether or not police should be able to use the software.

Clearview AI fell under heavy scrutiny earlier this year after The New York Times published an exposé that alleged the Federal Bureau of Investigation—and hundreds of other law enforcement offices—contracted with the firm for surveillance tools. Clearview AI features a database of over 3 billion images scraped from websites like Facebook, Twitter, and even Venmo, meaning your face may appear in the log, even without your permission.

These software tools also have a long history of algorithmic bias, which means AI misidentifies human faces due to race, gender, or age, according to a December 2019 report from the National Institute of Standards and Technology. That study found "the majority of face recognition algorithms exhibit demographic differentials."

The NIST study evaluated 189 software algorithms from 99 developers, representing a majority of the tech industry invested in facial recognition software. Researchers put the algorithms through two tasks: a "one-to-one" matching exercise, like unlocking a smartphone or checking a passport; and a "one-to-many" scenario, wherein the algorithm tests one face in a photo against a database.
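To make the distinction between the two tasks concrete, here is a minimal Python sketch. It is not how NIST or any vendor implements matching. It assumes each face has already been reduced to a short numeric vector (an "embedding"), and the names `verify` and `identify`, the tiny `gallery`, and the 0.9 threshold are all hypothetical choices made for illustration.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two vectors: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verify(probe, reference, threshold=0.9):
    """One-to-one: does the probe face match a single claimed identity?"""
    return cosine_similarity(probe, reference) >= threshold


def identify(probe, gallery, threshold=0.9):
    """One-to-many: return the best-matching name in the gallery, or None."""
    best_name, best_score = None, threshold
    for name, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Hypothetical three-dimensional embeddings; real systems use far longer vectors.
gallery = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
probe = [0.9, 0.1, 0.0]

print(verify(probe, gallery["alice"]))  # one-to-one check against one identity
print(identify(probe, gallery))         # one-to-many search across the gallery
```

A false positive in the one-to-one case wrongly unlocks a phone. In the one-to-many case, it can put the wrong person's name in front of an investigator, which is why the error rates matter so much more when a database is involved.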

Some of the results were disturbing. In the one-to-one exercises, NIST noticed a higher rate of false positives for Asian and African American faces when compared with white faces. "The differentials often ranged from a factor of 10 to 100 times, depending on the individual algorithm," the authors noted. They also saw higher rates of false positives for African American women, which is "particularly important because the consequences could include false accusations."
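A "factor of 10" differential is easier to picture with numbers. The sketch below computes a false positive rate for each demographic group and then the ratio between them. The trial data is invented purely for illustration and is not drawn from the NIST report; the group labels and the `false_positive_rates` helper are likewise hypothetical.

```python
from collections import defaultdict


def false_positive_rates(trials):
    """Return the false positive rate per group.

    Each trial is (group, same_person, predicted_match). Only impostor
    comparisons (same_person is False) can produce a false positive.
    """
    impostor = defaultdict(int)
    false_pos = defaultdict(int)
    for group, same_person, predicted_match in trials:
        if not same_person:
            impostor[group] += 1
            if predicted_match:
                false_pos[group] += 1
    return {group: false_pos[group] / impostor[group] for group in impostor}


# Made-up toy data: 1,000 impostor comparisons per group.
trials = (
    [("group_a", False, True)] * 1 + [("group_a", False, False)] * 999
    + [("group_b", False, True)] * 10 + [("group_b", False, False)] * 990
    + [("group_a", True, True)] * 50  # genuine pairs are ignored by the metric
)

rates = false_positive_rates(trials)
differential = rates["group_b"] / rates["group_a"]
print(rates)
print(f"group_b is falsely matched about {differential:.0f}x as often as group_a")
```

In this toy example, an algorithm that wrongly matches one in 1,000 faces from one group and 10 in 1,000 from another shows a tenfold differential, the low end of the range the NIST authors describe.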

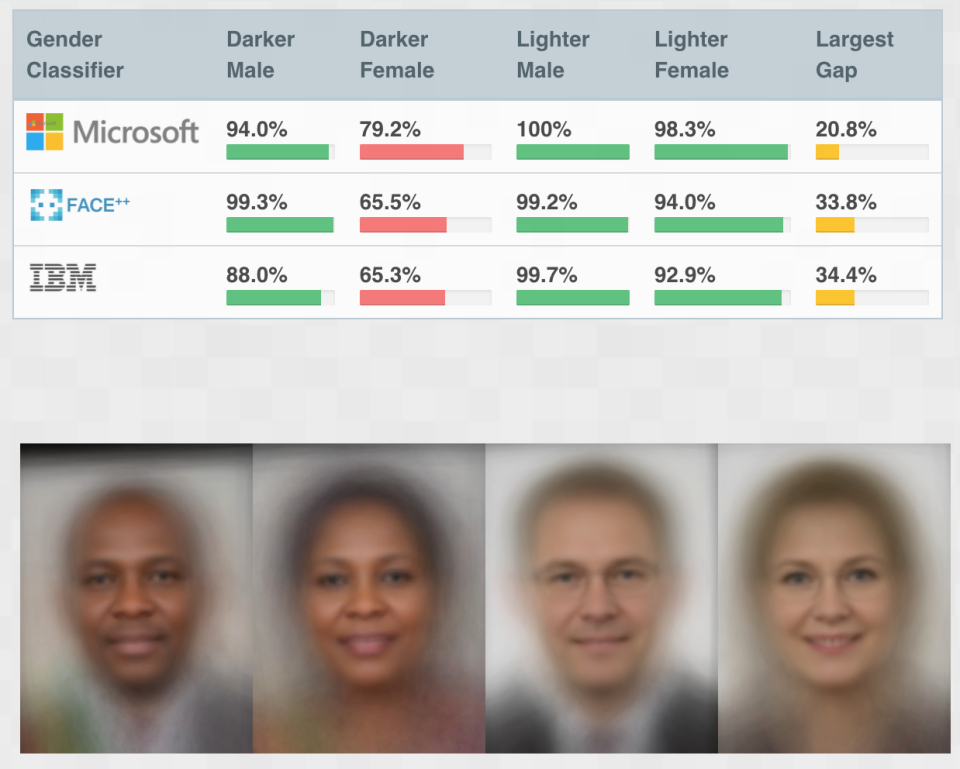

A January 2018 study, meanwhile, evaluated three commercial facial recognition software platforms from IBM, Microsoft, and Face++. In identifying faces by gender, each offering appeared to perform well, but upon closer inspection, the study authors found rampant bias. For one thing, all three platforms classified male faces far more accurately than female faces, with error rates differing by 8.1 percent to 20.6 percent.

That chasm widened further still in identifying dark-skinned female faces. "When we analyze the results by intersectional subgroups—darker males, darker females, lighter males, lighter females—we see that all companies perform worst on darker females," the authors said. IBM and Face++ correctly identified those faces only about 65 percent of the time. As a result of that study, IBM released a statement about its Watson Visual Recognition platform, promising to improve its service.

To be clear, algorithmic bias does not exist in a research vacuum, but has already made its way into the real world. One of the most damning examples comes from a 2016 ProPublica analysis of bias against black defendants in criminal risk scores, which are supposed to predict future recidivism. Instead, ProPublica found the formula used by courts and parole boards is "written in a way that guarantees black defendants will be inaccurately identified as future criminals more often than their white counterparts."

And just this week, Microsoft's AI news editor, which is meant to replace the humans running MSN.com, mistakenly illustrated a story about British pop group Little Mix. In the story, singer Jade Thirlwall reflected on her own experience with racism, but ironically the AI chose a photo of the band's other mixed-race member, Leigh-Anne Pinnock.

"Artificial intelligence is a powerful tool that can help law enforcement keep citizens safe," Krishna went on to say in his letter to Congress. "But vendors and users of AI systems have a shared responsibility to ensure that AI is tested for bias, particularly when used in law enforcement, and that such bias testing is audited and reported."

There's no telling if other companies that sell facial recognition software will follow suit, but it's unlikely any firms that specialize in the space, like Face++, would do so. Given Big Tech's silence amid the George Floyd protests, however, IBM's decision to take itself out of the equation is a step in the right direction.
