ChatGPT and Bing AI might already be obsolete, according to a new study

What you need to know

A new study suggests that scientists may be on the verge of a breakthrough in how AI systems learn language.

The new technique, dubbed Meta-learning for Compositionality (MLC), can make generalizations about language.

According to the benchmarks shared, a neural network trained with MLC could outperform AI-powered chatbots like Bing Chat and ChatGPT, which are themselves built on neural networks.

When presented with certain tasks, the MLC-trained neural network produced results similar to those of human participants, whereas the GPT-4 model struggled to complete the same tasks.

The study claims that the new design is able to understand and use new words in different settings better than ChatGPT.

As companies continue to invest in improving AI, scientists seem to have made a discovery that might surpass generative AI's capabilities.

According to the report in Nature, scientists refer to the technique as Meta-learning for Compositionality (MLC) and say it can make generalizations about language. They also claim it might be just as good as humans at folding new words into its vocabulary and applying them in different settings and contexts, resulting in remarkably human-like behavior.

When the technique was put to the test against ChatGPT (which uses neural network technology to understand and generate text based on users' prompts), the scientists concluded that both MLC and humans performed better. This is despite the fact that chatbots like ChatGPT and Bing Chat can interact in a human-like manner and serve as AI-powered assistants.

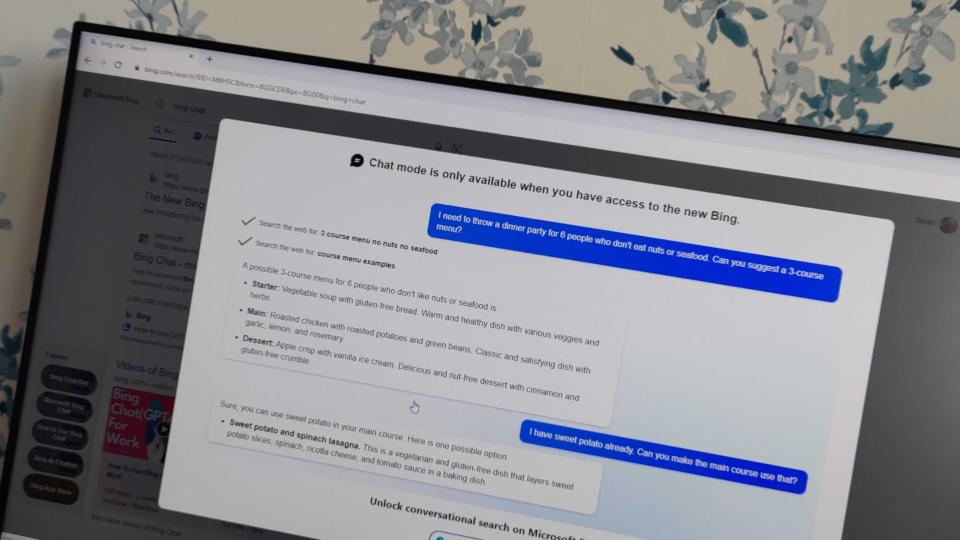

According to Nature's report, the new design could eventually outperform AI-powered chatbots, as it may allow machines to interact with people more naturally than existing systems do. For context, Microsoft's Bing Chat was spotted hallucinating in the early days after its launch, though the issue was later fixed.

Paul Smolensky, a scientist specializing in language at Johns Hopkins University in Baltimore, Maryland, stated that the technique is a "breakthrough in the ability to train networks to be systematic."

How does the neural network work?

As highlighted above, the MLC-trained neural network can fold new words into its vocabulary and use them in different settings, much like humans do. The main difference is that the network must first undergo rigorous training to master each word and how to use it in different settings.

To establish a baseline for the technology, the scientists first tested humans: they taught participants made-up words and gauged how well they could use those words in different contexts. They also tested the participants' ability to link the newly learned words with specific colors. According to the results shared, the participants picked the correct colors about 80% of the time.

The scientists used the same premise to train a neural network, but configured it to learn from its own mistakes. The goal was for the system to learn from every task it completed rather than from a static dataset. To make the neural network behave in a human-like way, the scientists also trained it to reproduce errors similar to those made by the people who took the test. Ultimately, this allowed the neural network to answer a fresh batch of questions almost exactly as humans did.
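To make "systematic generalization" more concrete, here is a minimal, hand-written Python sketch of the kind of word puzzle described above. The made-up words (`dax`, `wif`, `lug`, `zup`), their colors, and the two rules (`twice` and `after`) are hypothetical and chosen for illustration only; they are not claimed to be the study's exact vocabulary. Crucially, this is a rule-based toy, not the MLC neural network, which had to learn this behavior from examples rather than having the rules coded in.

```python
# Hypothetical vocabulary: made-up words, each mapped to a colored circle.
PRIMITIVES = {"dax": "red", "wif": "green", "lug": "blue"}


def interpret(phrase: str) -> list[str]:
    """Turn a phrase into a sequence of colors using two hypothetical rules:
    'X twice' repeats X's colors, and 'X after Y' outputs Y's colors, then X's."""
    words = phrase.split()
    if "after" in words:
        # 'after' binds most loosely, so split the phrase around it first.
        i = words.index("after")
        left = " ".join(words[:i])
        right = " ".join(words[i + 1:])
        return interpret(right) + interpret(left)
    if words and words[-1] == "twice":
        # Repeat whatever the rest of the phrase means.
        return interpret(" ".join(words[:-1])) * 2
    # Otherwise, each word is a primitive that maps straight to a color.
    return [PRIMITIVES[w] for w in words]


print(interpret("dax twice after lug"))

# Teaching one new word is enough: the existing rules apply to it immediately.
PRIMITIVES["zup"] = "yellow"
print(interpret("zup twice"))
```

The point of the sketch is the last two lines: once a new word is learned, a system that generalizes systematically can combine it with rules it already knows, without needing separate examples of every combination.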

GPT-4, on the other hand, took quite some time to make sense of the tasks presented to it. Even then, its results were dismal compared to those of humans and the neural network, averaging between 42 and 86 percent depending on the tasks presented. Put simply, the issue with GPT and similar systems is that they mimic highly complex syntax rather than demonstrating a true understanding of context. This is what leads GPT and similar models down hallucination-filled rabbit holes. Humans are better at self-correcting anomalies like this, and neural networks trained with MLC may be as well.

While this suggests that a neural network trained this way could be the next big thing after generative AI, much more testing and research is needed to confirm it. It will be interesting to see how this plays out and how it reshapes research into systematic generalization.

What does the future hold for ChatGPT and Bing Chat?

There's no doubt about generative AI's power and potential, especially if its vast capabilities are fully explored and put to good use. That said, the technology is already achieving amazing feats. Recently, a group of researchers showed that it's possible to successfully run a software company using ChatGPT and even generate code in under seven minutes for less than a dollar.

Impressive as it is, generative AI faces its fair share of setbacks, including the exorbitant cost of keeping it running and the vast amounts of energy and cooling water it consumes. There have also been reports of OpenAI's AI-powered chatbot, ChatGPT, losing accuracy and of its user base declining for three consecutive months. Bing Chat's market share has also stagnated despite Microsoft's heavy investment in the technology.

Do you think neural networks like this one will eventually overshadow AI-powered chatbots like ChatGPT and Bing AI? Share your thoughts with us in the comments.