Calm down, Adobe tells fans – Photoshop’s new small print isn’t as controversial as it looks

Adobe has been under fire lately, having been called out for its "shocking dismissal of photography" by the American Society of Media Photographers for some tone-deaf Photoshop ads it ran a few weeks ago. And now the software giant has been forced to defend itself again, after a social media outcry over some new Photoshop terms and conditions that started rolling out this week.

Over the past few days, a number of high-profile Photoshop users have expressed their dismay on X (formerly Twitter) about a new 'Updated Terms of Use' pop-up that they've been forced to accept. The new small print contains some seemingly alarming lines, including one that states "we may access your content through both automated and manual methods, such as for content review".

Adobe has now defended the updated conditions in a blog post. In short, Adobe claims that the slightly ambiguous legalese in its new small print has created an unnecessary furore, and that nothing has fundamentally changed. The two key takeaways are that Adobe says it "does not train Firefly Gen AI models on customer content" and that it will "never assume ownership of a customer's work".

On the latter point, Adobe explains that apps like Photoshop need to access our cloud-based content in order to "perform the functions they are designed and used for", like opening and editing files. The new terms and conditions also only impact cloud-based files, with the small print stating that "we [Adobe] don’t analyze content processed or stored locally on your device".

Adobe does admit that its new small print could have been explained better, and stated that "we will be clarifying the Terms of Use acceptance customers see when opening applications". But while the statement should help to allay some fears, other concerns will likely remain.

One of the main points raised on social media was concern about what Adobe's content review processes mean for work that's under NDA (Non-Disclosure Agreement). Adobe says in its statement that for work stored in the cloud, Adobe "may use technologies and other processes, including escalation for manual (human) review, to screen for certain types of illegal content".

That may not completely settle the privacy concerns of some Adobe users, then, although those issues are arguably applicable to using cloud storage in general, rather than Adobe specifically.

A crisis of trust?

This Adobe incident is another example of how the aggressive expansion of cloud-based services and AI tools is contributing to a crisis of trust between tech giants and software users – in some cases, understandably so.

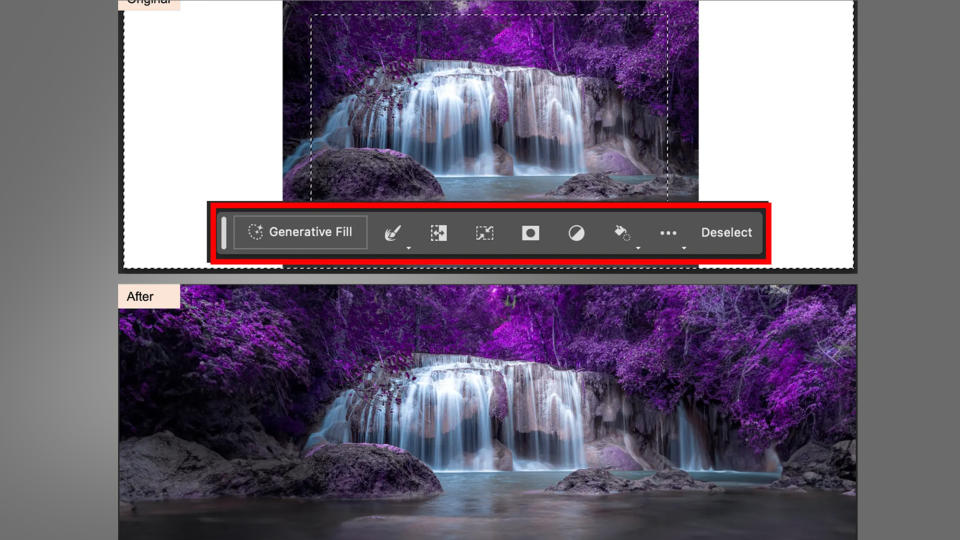

On one hand, the convenience of cloud storage has been a massive boon for creatives – particularly for those with remote teams spread across the world – and AI tools like Generative Fill in Photoshop can also be big time-savers.

But they can also come at a cost, and it remains the case that the only way to ensure true privacy is to store your work locally rather than in the cloud. For many Photoshop users, that won't be an issue, but the furore will no doubt still see some looking for the best Photoshop alternatives that don't have such a big cloud component.

As for AI tools, Adobe remains the self-appointed torch-bearer for 'ethical' AI that isn't trained on copyrighted works, though it has landed in some controversies. For example, last month the estate of legendary photographer Ansel Adams accused Adobe on Threads of selling AI-created imitations of his work.

In fairness to Adobe, it removed the work and stated that it "goes against our Generative AI content policy". But the episode again shows the delicate balancing act that companies like Adobe now face between rolling out powerful new AI-powered tools and retaining the trust of both users and creatives.